How to Build an Intelligent Machine | Louis-Philippe Morency

Can computers help diagnose depression? Louis-Philippe Morency, from Carnegie Mellon University, thinks they can. He’s been working on enabling machines to understand and analyze subtle human behavior that can betray sadness and happiness. In this video for the World Economic Forum, Morency introduces SimSensei, a virtual human platform specifically designed for healthcare support, and explains why he hopes future intelligent machines will work alongside doctors as colleagues.

Click on the video link for the full presentation, or read some quotes below.

On detecting subtle communication

“Let's look at one of the most intelligent machines: the human. Us. And if you look at one of the most important factors in the development of humans, it is communication. Really early on we communicate our happiness, our surprise, even our sadness. We do it through our gestures. Communication is a core part of the interaction between mother and child, and we develop these communication skills throughout our lives.”

“Communication is done through three main modalities, the three “Vs”: the verbal, the vocal and the visual. The first one is the verbal. When we communicate we choose specific words; their meaning is important, but every word can carry really subtle changes. Even a word like “okay”. This subtlety of human communication is so powerful, and makes communication efficient and meetings possible. Another “V” is the visual: a lot of what we communicate comes through my gestures, my facial expressions, my posture.

“All of these “Vs” are essential to human communication, so how are we communicating with computers? We have keyboards and mice, and these days we also have newer technology like touch screens. We're getting closer, but it's still far from human communication. When we think about human-machine communication, being able to understand and communicate with humans in a natural way becomes important. This is true for embodied machines like robots, but it is also true for non-embodied machines like your cell phone or computer. This capability of intelligent machines to understand the subtlety of human communication is what is exciting to the scientific community.”

On developing new technology

“We are able to go from a really shallow interpretation of human communication to a much deeper understanding: from understanding only the motion of facial landmarks such as the eyebrows, to a level where we also understand subtle changes in the appearance of the face. We have got to the point where we can study all 48 muscles of the face and from this infer the expression of emotion. We're getting closer to deeper interpretation.”

“One of the key aspects is that we're looking at multiple levels, and there is now a lot more information freely available. So we can go from small data to big data, with many examples of people communicating, not just in the laboratory but in different scenarios, different cultures and different individuals.”

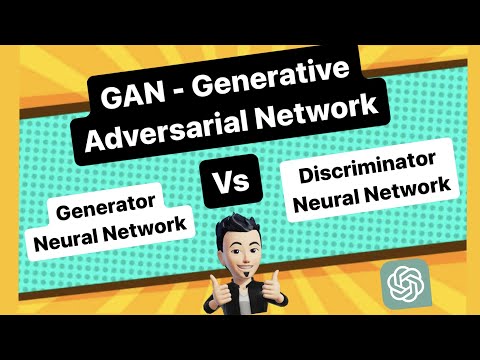

“The last piece is that the algorithms are getting a lot smarter, and we can handle a lot more of this data. So multimodal artificial intelligence is one of the key components of an intelligent machine, and that's also what allows us to think about what we want these intelligent machines to be or to do.”

On taking the interaction test

“Right now I can command my cell phone to do something, but I believe that computers are a lot more about being colleagues and co-workers. For example, they can help during the diagnosis of a mental health disorder by giving feedback to the doctor. Let me give you an example of a first step toward these intelligent machines: Ellie is there to help doctors during mental health assessments. She is not a virtual doctor; she is an assistant, an interviewer gathering information. When we are looking at depression, or distress in general, it is challenging to diagnose successfully. A doctor has an intuition about a disease, and they will do a blood test and then look at the details. For mental health we now have the possibility of taking an interaction sample. For instance, asking how that person changed over time. So maybe we can reduce the treatment and change the medication.”

“Let me show an interaction and how she can interact in real time and perceive people's visual and vocal cues. We had more than 250 people interact with our virtual human platform SimSensei over a period of just a few weeks, and what was interesting was that the interaction was supposed to last only 15 minutes. But people enjoyed talking with her, so the conversations lasted 30 or 40 minutes. In fact, if you look at how much sadness or real behavior they show, they share more when they're interacting with the intelligent machine than when they think there's a human behind it pressing the buttons.”